Multispectral Imagery:

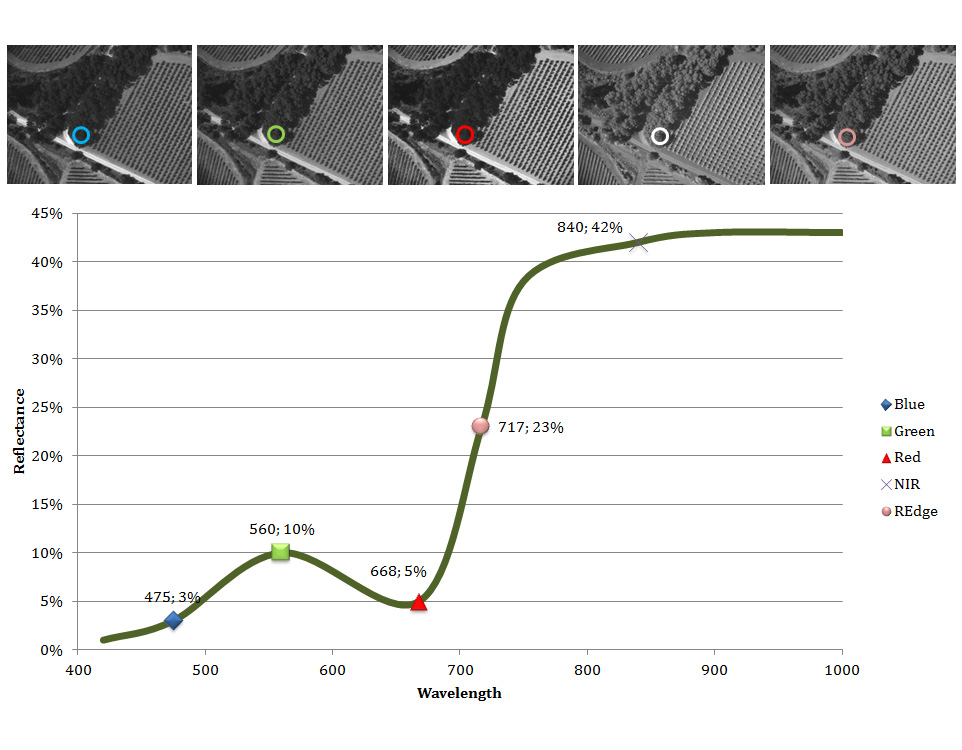

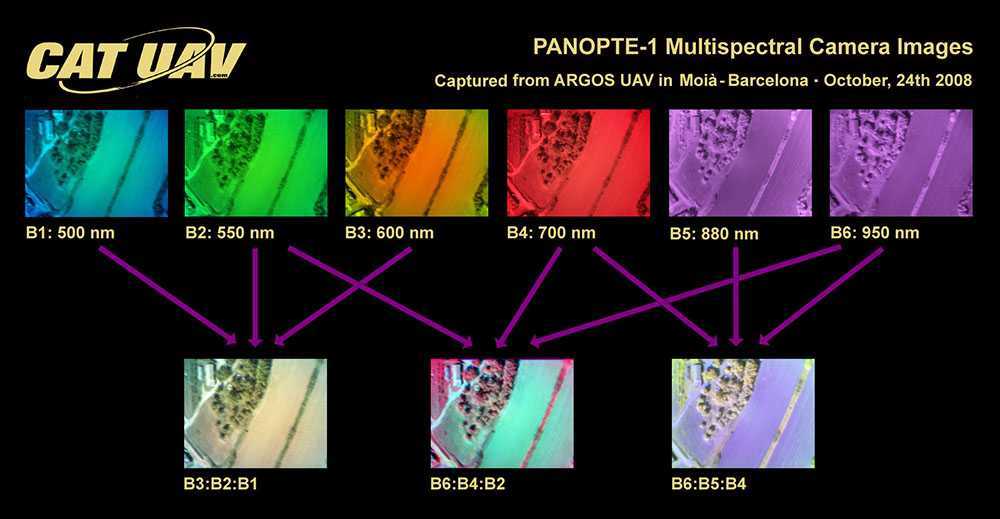

Multispectral imagery captures data in several bands of the electromagnetic spectrum. Data can in principle be acquired at any wavelength, but the regions most commonly sensed in UAV applications are the visible (380 to 740 nm), the near-infrared (740 to 1400 nm) and the long-wavelength infrared (8 to 15 µm). Multispectral data gives us information about the sensed objects beyond the capabilities of the human eye, and the bands can be combined using remote sensing techniques to extract relevant information about the surveyed objects. Depending on the spectral bands used, different properties can be estimated, such as vegetation vigor, humidity or temperature. One of the fields in which multispectral imagery is most widely used is vegetation studies, especially precision agriculture. Cameras specifically designed for this kind of application can capture the information needed to distinguish between high- and low-vigor plants.
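As an illustration of how bands can be combined, the sketch below computes the Normalized Difference Vegetation Index (NDVI), a widely used vigor indicator defined as (NIR - Red) / (NIR + Red). NDVI is not named in the text above; it is used here only as a common example. The function name `ndvi` and the sample reflectance values are hypothetical, and the bands are assumed to be already co-registered and expressed as reflectance.

```python
def ndvi(nir, red):
    """Return (NIR - Red) / (NIR + Red) per pixel.

    Both inputs are lists of rows with the same shape. Pixels where
    NIR + Red is zero have no defined index and are returned as None.
    """
    result = []
    for nir_row, red_row in zip(nir, red):
        row = []
        for n, r in zip(nir_row, red_row):
            total = n + r
            row.append((n - r) / total if total != 0 else None)
        result.append(row)
    return result


# Hypothetical 2x2 reflectance values (illustrative only, not measured data).
nir_band = [[0.50, 0.30], [0.45, 0.0]]
red_band = [[0.08, 0.20], [0.05, 0.0]]

index = ndvi(nir_band, red_band)
for row in index:
    print(row)
```

Healthy vegetation reflects strongly in the near-infrared and absorbs red light, so higher values generally point to more vigorous plants. In practice the thresholds separating high and low vigor depend on the crop, the sensor and the calibration applied.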

Hyperspectral Imagery:

Multispectral data is typically limited to between 3 and 15 spectral bands. Although that number of bands is more than enough for some standard applications, some scientific and technical solutions may require more. In that case, hyperspectral cameras can be used, which can capture more than 100 bands. Furthermore, each spectral band is much narrower, with bandwidths down to 1 nm. This large improvement in spectral resolution makes it possible to precisely differentiate between sensed objects, opening the door to high-precision applications such as plant species identification. This kind of sensor can be useful in early research to determine which spectral bands give the most relevant information about the objects or phenomena under study.

Radiometric Calibration:

The quantity most commonly measured when working with multispectral imagery is reflectance, which is the fraction of incident light that is reflected by the sensed surface. However, the measured value is affected by multiple factors, such as atmospheric conditions, time of year or drone flight altitude. To obtain comparable information between different flights or data sources, the images must be calibrated. This is achieved using calibration targets photographed during the operation and applying custom image processing techniques that depend on the camera used.
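A minimal sketch of the idea behind target-based calibration follows. It assumes a single reference panel of known reflectance, a sensor whose response is linear, and raw values that have already had any dark offset removed. Real workflows are camera-specific and usually add further corrections (vignetting, exposure, irradiance), so this is an illustration and not any vendor's procedure. The function names and all numbers are hypothetical.

```python
from statistics import mean


def panel_scale_factor(panel_pixels, panel_reflectance):
    """Factor that maps raw pixel values to reflectance.

    panel_pixels: raw values sampled over the calibration panel.
    panel_reflectance: known reflectance of the panel for this band (0 to 1).
    """
    panel_mean = mean(panel_pixels)
    if panel_mean <= 0:
        raise ValueError("panel pixels must have a positive mean")
    return panel_reflectance / panel_mean


def to_reflectance(band, factor):
    """Apply the scale factor to every pixel of a band (list of rows)."""
    return [[value * factor for value in row] for row in band]


# Hypothetical values: a panel with 50 % reflectance and its raw readings.
panel_pixels = [20000, 20200, 19800]
factor = panel_scale_factor(panel_pixels, 0.5)

# Hypothetical raw band from the same flight and the same band as the panel.
raw_band = [[8000, 16000], [4000, 20000]]
reflectance = to_reflectance(raw_band, factor)
print(reflectance)
```

Because the factor is recomputed from the panel image taken on each flight, changes in illumination between flights are absorbed into it, which is what makes the resulting reflectance values comparable across dates.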